Declarative Feature Engineering at PayPal

Dec 07, 2023


PayPal supports over 400 million active consumers and merchants worldwide, and processes several thousand payment transactions every minute. To prevent fraud in real time at such a scale, we need to streamline our ML workflow and feature engineering processes so that we can build strong predictors of behavior and risk indicators. On top of that, this must be done with a consistently predictable Time to Market (TTM) and a sustainable Total Cost of Ownership (TCO).

What is declarative feature engineering?

While the term "declarative feature engineering" was first introduced by Airbnb's Zipline in 2019, we have used this paradigm successfully at PayPal for the last decade, though we know it as config-based feature engineering. The idea is to let data scientists declare what their features look like rather than explicitly specify how to construct them on top of different execution platforms. In this way, the concerns of feature construction and execution are abstracted away from data scientists and handled by engineers.

This post is the first in a two-part series outlining how the declarative feature engineering approach helps our engineers address scale, TTM, and TCO requirements.

Let’s start with the definitions of metrics that measure our success.

TTM of the ML Feature

The TTM of a machine learning (ML) feature is the length of time from the feature concept until its release to production. A predictable TTM is essential for overall AI maturation and for business-first AI strategies.

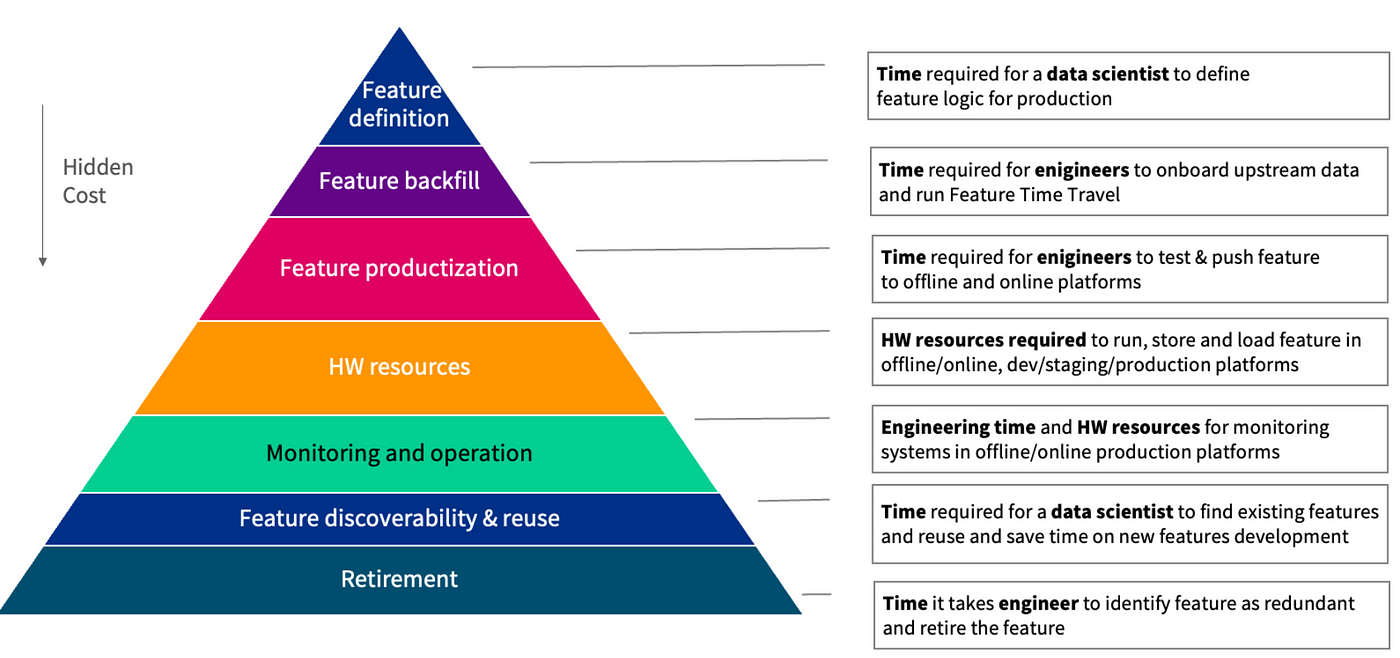

Cost of the ML Feature

To qualify and estimate TCO, we break down the cost of the feature as follows:

The main insight from the feature cost analysis is that we have to reuse existing features across teams whenever possible. Otherwise, we pay twice for every aspect of the cost.

To approach the above challenges, we separate features into three levels of complexity so we can define tailored strategies for scale, TTM, and TCO at every level.

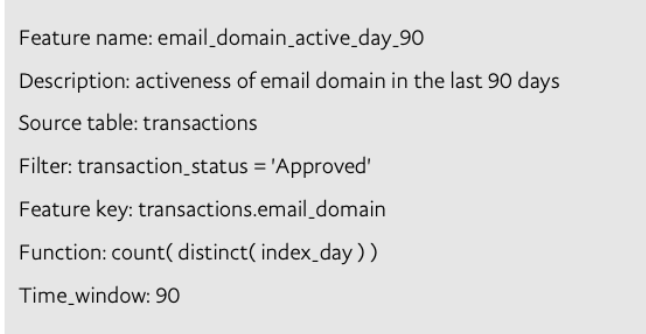

Simple features

Simple features account for the majority of features. They include roll-up aggregations (sum, count, average) and categorical features (such as marital status). For example:

Declaring features this way makes it easier to:

- Detect similar declarative features and automatically recommend that data scientists reuse existing ones, which improves TTM and TCO

- Customize features, for example by changing the aggregation function or the aggregation time window

- Estimate the upfront cost of a feature and evaluate its return on investment (ROI) before it goes into production

- Maintain features (TCO), thanks to a standardized implementation
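To make the reuse and customization points concrete, here is a minimal sketch of what a declarative feature could look like in Python. The `FeatureSpec` class, its fields, and the `find_similar` helper are hypothetical names chosen for illustration; they are not PayPal's actual configuration format.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FeatureSpec:
    """Hypothetical declaration of a roll-up aggregation feature."""
    name: str
    entity: str       # key the feature is computed for, e.g. "account_id"
    source: str       # logical event source, e.g. "payments"
    column: str       # column being aggregated
    aggregation: str  # "sum", "count", or "avg"
    window_days: int  # aggregation time window

    def signature(self):
        # Everything except the name and the window. Specs that share a
        # signature differ only in their window, so they are reuse candidates.
        return (self.entity, self.source, self.column, self.aggregation)


def find_similar(candidate, registry):
    """Return registered specs with the same signature, closest window first."""
    same = [s for s in registry if s.signature() == candidate.signature()]
    return sorted(same, key=lambda s: abs(s.window_days - candidate.window_days))


registry = [
    FeatureSpec("pmt_sum_30d", "account_id", "payments", "amount", "sum", 30),
    FeatureSpec("pmt_cnt_7d", "account_id", "payments", "amount", "count", 7),
    FeatureSpec("pmt_sum_7d", "account_id", "payments", "amount", "sum", 7),
]

# Customization: derive a new declaration by changing only the name and window.
candidate = replace(registry[0], name="total_paid_last_week", window_days=7)

# Detection: the candidate duplicates an existing feature under another name.
matches = find_similar(candidate, registry)
```

Because a declaration is plain data, comparing two features reduces to comparing fields, which is much harder to do reliably for hand-written pipeline code.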

Code-based features

For more complex analytics, we plan for side-by-side development to reduce the number of iterations between data scientists and software engineers. However, it’s more challenging to:

- Enforce standards and best practices

- Interpret and customize to reuse these features for other use cases

- Backfill historical data for the model training

- Maintain these features in production

Analytical platforms for feature generation

For state-of-the-art feature generation, we develop analytical platforms for customer graphs, natural language processing (NLP), and anomaly detection.

These platforms, however, are the most difficult to customize and maintain due to their high complexity.

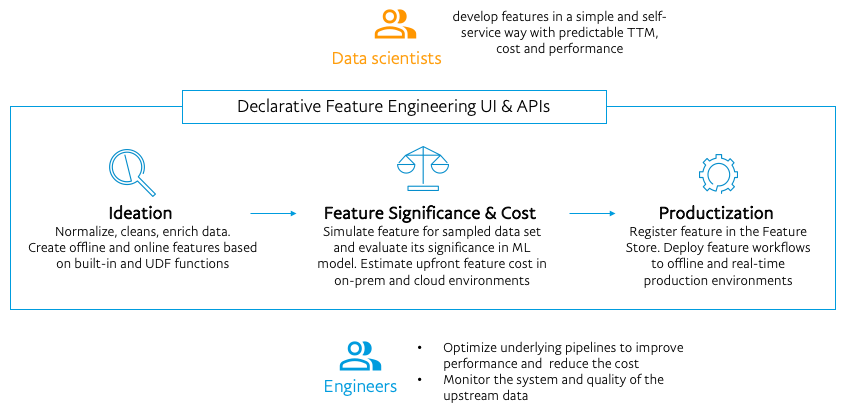

Self-service tool for declarative feature engineering

Simple features are a perfect fit for the declarative feature engineering paradigm. Data scientists can declare what their features look like rather than explicitly specify how to construct them on top of the different execution platforms (batch, near-real-time, and real-time) that are standard compute platforms in the industry.
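As a rough illustration of one declaration targeting more than one execution platform, the sketch below renders the same hypothetical spec as a batch SQL query and as an in-memory streaming updater. The dict layout, the `event_time` column, the `:as_of` parameter, and both function names are assumptions made for this example. The SQL is generic, so interval syntax will differ between engines.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Hypothetical declaration: a plain dict standing in for a config entry.
spec = {
    "name": "pmt_sum_7d",
    "entity": "account_id",
    "source": "payments",
    "column": "amount",
    "aggregation": "sum",
    "window_days": 7,
}


def to_batch_sql(spec):
    """Render the declaration as a query for a batch SQL engine."""
    return (
        f"SELECT {spec['entity']}, "
        f"{spec['aggregation'].upper()}({spec['column']}) AS {spec['name']} "
        f"FROM {spec['source']} "
        f"WHERE event_time >= :as_of - INTERVAL '{spec['window_days']}' DAY "
        f"AND event_time < :as_of "
        f"GROUP BY {spec['entity']}"
    )


def to_stream_updater(spec):
    """Build an incremental updater for the same declaration (sum/count only).

    Assumes events arrive in timestamp order for each entity.
    """
    if spec["aggregation"] not in ("sum", "count"):
        raise ValueError("this sketch only supports sum and count")
    window = timedelta(days=spec["window_days"])
    events = defaultdict(deque)  # per-entity (timestamp, value) pairs

    def update(event):
        key, ts = event[spec["entity"]], event["event_time"]
        value = 1 if spec["aggregation"] == "count" else event[spec["column"]]
        q = events[key]
        q.append((ts, value))
        # Evict events that fell out of the window (ts - window, ts].
        while q and q[0][0] <= ts - window:
            q.popleft()
        return sum(v for _, v in q)

    return update


sql = to_batch_sql(spec)
update = to_stream_updater(spec)
t0 = datetime(2023, 1, 1)
first = update({"account_id": "a", "amount": 10.0, "event_time": t0})
second = update({"account_id": "a", "amount": 5.0, "event_time": t0 + timedelta(days=1)})
later = update({"account_id": "a", "amount": 2.0, "event_time": t0 + timedelta(days=8)})
```

The data scientist only ever edits `spec`; the two generators are where platform-specific concerns such as window boundaries and state handling would live.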

We provide data scientists with a UI and APIs to specify feature logic declaratively, simulate features (also known as Time Travel for features), and productize them without direct support from engineers. This drastically reduces project planning overhead by removing timeline dependencies, which in turn improves TTM.

Behind the scenes, the system is designed to:

- Generate and optimize all the underlying data pipelines to reduce TCO.

- Enforce Time Travel style in feature implementation so that features can be computed for any Point in Time (PiT) of historical data. As a result, data scientists can run backfill for the feature on a self-service basis for any period of time.

- Register features to the Feature Store for re-use by other data scientists.

- Track TTM metrics and identify potential areas of improvement.

- Enforce engineering standards and best practices for easier support and maintenance.
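The Time Travel responsibility in the list above can be sketched as follows: a feature is always computed relative to an explicit "as of" timestamp and only sees events that happened before it. This is a minimal in-memory illustration, not PayPal's implementation; the function names, the field names (`account_id`, `amount`, `event_time`, `label_time`), and the half-open window convention are all assumptions.

```python
from datetime import datetime, timedelta


def feature_as_of(events, entity_id, as_of, window_days):
    """Sum of `amount` for one entity over the window [as_of - window, as_of)."""
    start = as_of - timedelta(days=window_days)
    return sum(
        e["amount"]
        for e in events
        if e["account_id"] == entity_id and start <= e["event_time"] < as_of
    )


def backfill(events, labels, window_days):
    """Attach to each labeled row the feature value as of that row's timestamp."""
    return [
        {
            **row,
            "pmt_sum": feature_as_of(
                events, row["account_id"], row["label_time"], window_days
            ),
        }
        for row in labels
    ]


events = [
    {"account_id": "a", "amount": 10.0, "event_time": datetime(2023, 1, 1)},
    {"account_id": "a", "amount": 20.0, "event_time": datetime(2023, 1, 5)},
    {"account_id": "a", "amount": 40.0, "event_time": datetime(2023, 1, 20)},
]
labels = [
    {"account_id": "a", "label_time": datetime(2023, 1, 6)},
    {"account_id": "a", "label_time": datetime(2023, 1, 21)},
]

rows = backfill(events, labels, window_days=7)
```

The first row sees only the January 1 and January 5 payments, and the second only the January 20 payment. Excluding events at or after `as_of` is what keeps future information out of the training data.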

Conclusion

The declarative feature engineering paradigm has proven to be very effective for developing simple features at scale with a predictable Time to Market and a sustainable Total Cost of Ownership. We focus on self-service and automation for declarative features, which allows us to free up engineers' time to concentrate on state-of-the-art, next-generation AI projects.

In this post, we outlined our solution’s functionality, and in the subsequent post, we will do a deep dive into the technical aspects. Stay tuned!