Scaling Kafka to Support PayPal’s Data Growth

Sept 07, 2023


Apache Kafka is an open-source distributed event streaming platform that is used for data streaming pipelines, integration, and ingestion at PayPal. It supports our most mission-critical applications and ingests trillions of messages per day into the platform, making it one of the most reliable platforms for handling the enormous volumes of data we process every day.

To handle the tremendous growth of PayPal’s streaming data since its introduction, Kafka needed to scale seamlessly while ensuring high availability, fault tolerance, and optimal performance. In this blog post, we will provide a high-level overview of Kafka and discuss the steps taken to achieve high performance at scale while managing operational overhead, and our key learnings and takeaways.

Kafka at PayPal

Kafka was introduced at PayPal in 2015, with a handful of isolated clusters. Since then, the scale of the platform has increased drastically, as PayPal’s payment volumes have grown significantly over the last five years. At PayPal, Kafka is used for first-party tracking, application health metrics streaming and aggregation, database synchronization, application log aggregation, batch processing, risk detection and management, and analytics and compliance, with each of these use-cases processing over 100 billion messages per day.

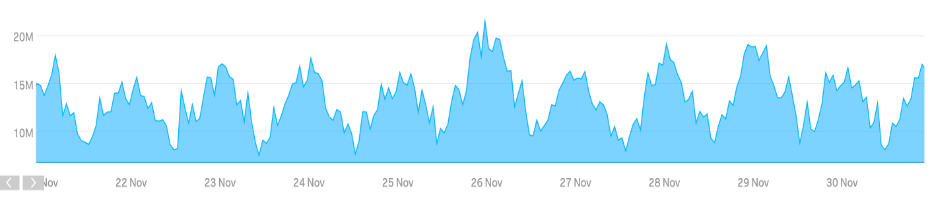

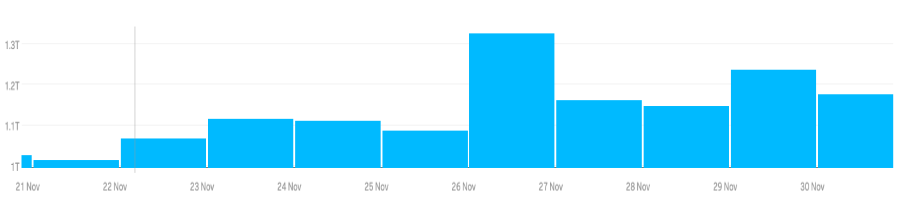

Today, our Kafka fleet consists of over 1,500 brokers that host over 20,000 topics, plus close to 2,000 MirrorMaker nodes that mirror data among the clusters, and it offers 99.99% availability. During Retail Friday 2022, Kafka traffic volume peaked at about 1.3 trillion messages per day. At present, we have 85+ Kafka clusters, and every holiday season we flex up our Kafka infrastructure to handle the traffic surge. The Kafka platform continues to scale seamlessly to support this traffic growth without any impact to our business.

Retail Friday 2022: 21 million/sec

Cyber Monday 2022: 19 million/sec

Retail Friday 2022: 1.32 trillion / day

Cyber Monday 2022: 1.23 trillion / day
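As a quick sanity check on these figures, the daily totals can be converted into an average per-second rate and compared with the per-second numbers. The snippet below is only illustrative arithmetic on the values quoted above. It assumes the per-second figures are peak rates and the per-day figures are totals, which the post does not state explicitly, and the function name is ours.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rate_per_sec(messages_per_day: float) -> float:
    """Average messages per second implied by a daily total."""
    return messages_per_day / SECONDS_PER_DAY


# Figures quoted above for the 2022 peak days.
peak_days = {
    "Retail Friday": {"per_day": 1.32e12, "per_sec": 21e6},
    "Cyber Monday": {"per_day": 1.23e12, "per_sec": 19e6},
}

for day, figures in peak_days.items():
    avg = average_rate_per_sec(figures["per_day"])
    ratio = figures["per_sec"] / avg
    print(f"{day}: average {avg / 1e6:.1f}M/sec, quoted rate is {ratio:.2f}x the average")
```

Under that assumption, the quoted per-second rates sit roughly a third above the daily average, which is the kind of headroom the holiday flex-up has to cover.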

Operating Kafka at PayPal

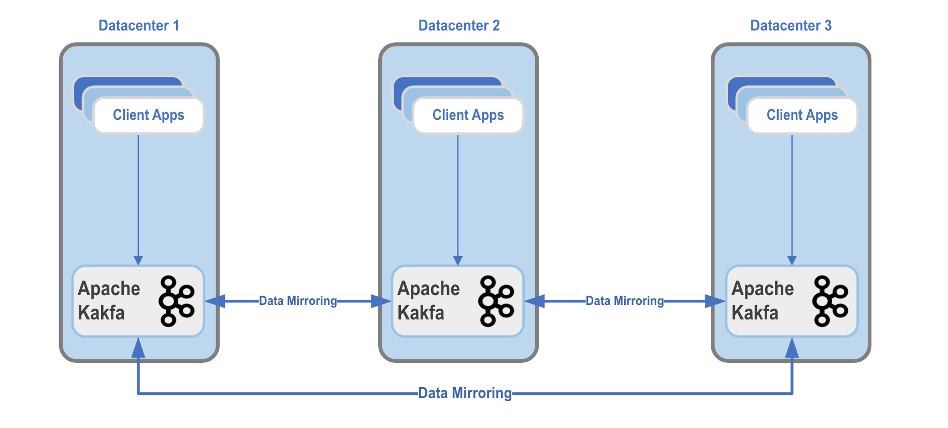

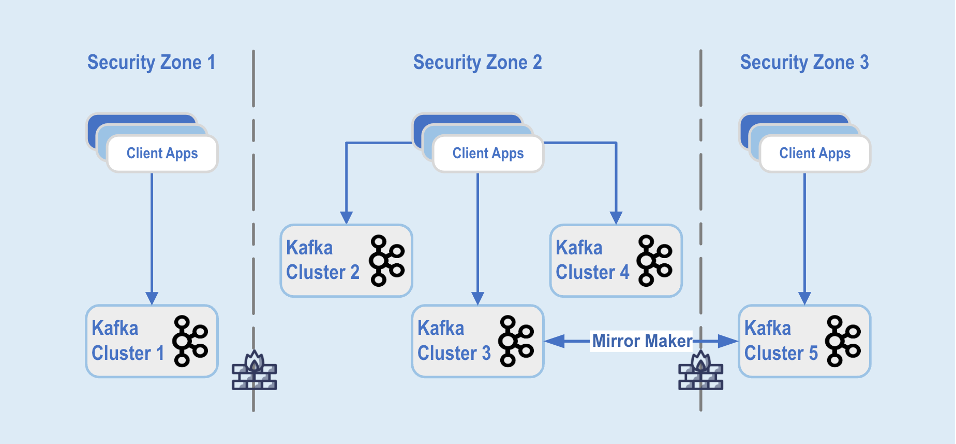

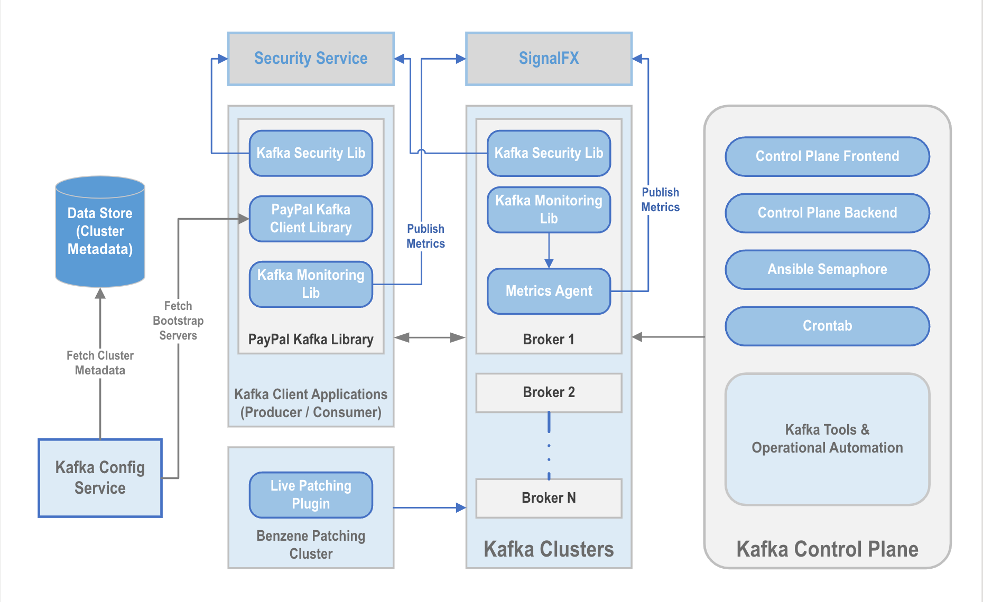

PayPal infrastructure is spread across multiple geographically distributed data centers and security zones (Fig 3 and 4). The Kafka clusters are deployed across these zones based on data classification and business requirements. MirrorMaker mirrors the data across the data centers, which helps with disaster recovery and enables communication between security zones.

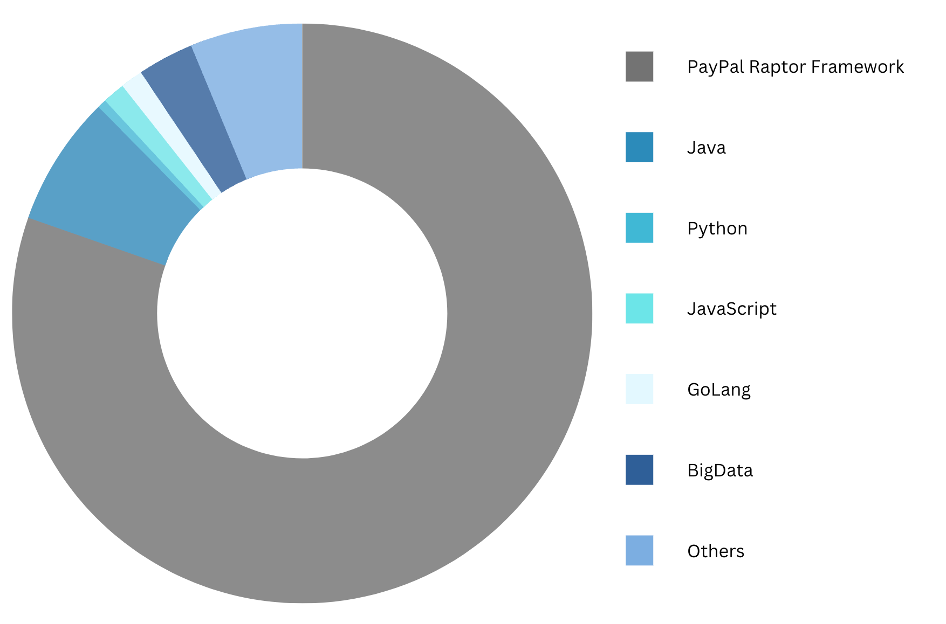

Each Kafka cluster consists of brokers and ZooKeeper nodes. Client applications connect to the topics hosted on these brokers to publish data to, or consume data from, a cluster in the same or a different security zone (via MirrorMaker). At PayPal, we support a wide array of clients, including Java, Python, Spark, Node, Golang, PayPal internal frameworks and more.

Operating Kafka at PayPal comes with its own set of challenges. With the many frameworks, tech stacks, legacy applications, and internal and external products we interact with, we need to keep evolving and investing in robust tools that help us reduce and manage the operational overhead. Over the years, we have invested in a few key areas that have helped us manage our ever-growing fleet of Kafka clusters, simplify client integration, improve dependency management, and consistently release new features and enhancements for security and platform stability. Some of the areas that we have invested in include:

- Cluster Management

- Monitoring and Alerting

- Configuration Management

- Enhancements and Automation

Cluster Management

To have better control over Kafka clusters and reduce operational overhead, we have introduced a few improvements, including:

- Kafka Config Service

- ACLs

- PayPal Kafka Libraries

- QA Environment

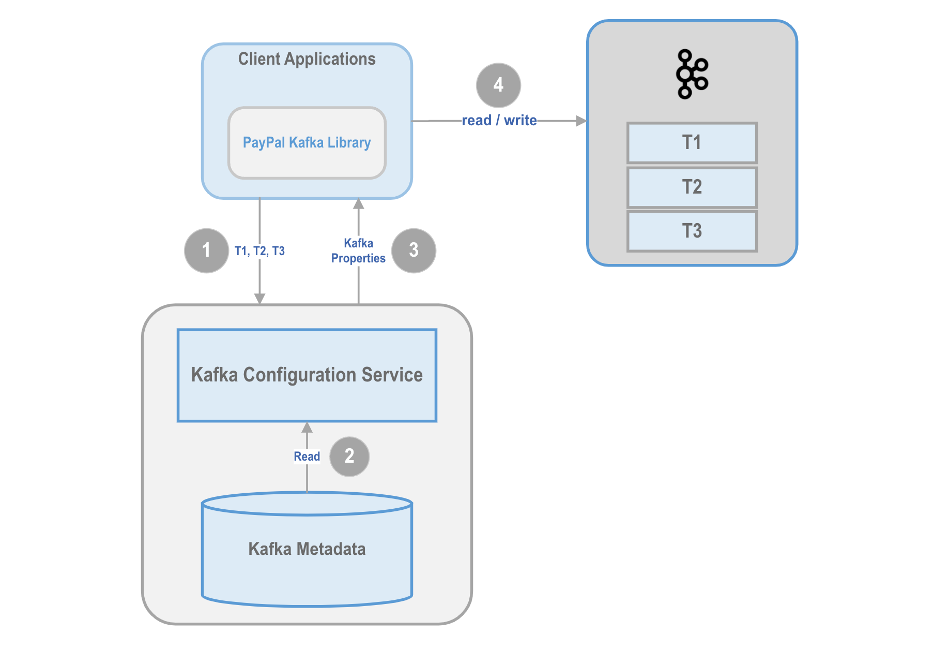

Kafka Config Service

Before the Kafka config service existed, clients hardcoded the IPs of the brokers they connected to. Brokers need to be replaced for various reasons, such as upgrades, patching and disk failures, and every replacement became a maintenance nightmare with multiple incidents for us to handle. Another issue was a limited understanding of the many configuration properties Kafka provides and what they do: Kafka clients would override these properties without knowing the implications, which generated a lot of noise and consumed engineering bandwidth in product support.

The Kafka config service is a critical, highly available, stateless service that considerably reduces operational overhead for the Site Reliability Engineering (SRE) and application teams. Kafka clients need a set of bootstrap servers during initialization, which are the brokers on which the topics are hosted, and the application connects to those brokers to produce or consume messages. At application start, the service pushes the standard configuration to every Kafka client, along with the necessary bootstrap server details. If anything changes, application teams do not have to spend time following up with the platform team; they can simply restart their application to pick up the most recent changes.
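To make the idea concrete, here is a minimal sketch of the lookup a client could perform at startup. It is not PayPal's implementation: the `KafkaConfigService` class, its in-memory data, the topic name and the broker addresses are all hypothetical stand-ins. Only the property names (`bootstrap.servers`, `acks`, `compression.type`) are standard Kafka client settings.

```python
from dataclasses import dataclass, field


@dataclass
class KafkaConfigService:
    """Hypothetical in-memory stand-in for a central config service."""

    topic_to_brokers: dict[str, list[str]]
    standard_config: dict[str, dict[str, str]] = field(default_factory=dict)

    def client_config(self, topic: str, role: str) -> dict[str, str]:
        """Return the standard config for a role plus the current bootstrap servers."""
        config = dict(self.standard_config.get(role, {}))
        config["bootstrap.servers"] = ",".join(self.topic_to_brokers[topic])
        return config


service = KafkaConfigService(
    topic_to_brokers={"payments.events": ["broker-1:9093", "broker-2:9093"]},
    standard_config={"producer": {"acks": "all", "compression.type": "lz4"}},
)

# The application asks for its config at startup instead of hardcoding broker IPs.
before = service.client_config("payments.events", "producer")

# The platform team replaces broker-2 during maintenance...
service.topic_to_brokers["payments.events"] = ["broker-1:9093", "broker-7:9093"]

# ...and a restarted application picks up the new brokers with no code change.
after = service.client_config("payments.events", "producer")

print(before["bootstrap.servers"])
print(after["bootstrap.servers"])
```

The design point is that broker addresses and recommended settings live in one place, so a broker replacement becomes a data change on the service side rather than a change in every client.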

Benefits

- Offers a plug-and-play model for Kafka clients

- Reduces operational and support overhead

- Helps in standardizing the Kafka configuration across multiple different clients

Kafka ACLs

Before Access Control Lists (ACLs) came into the picture, Kafka allowed connections on a plaintext port, and any PayPal application could connect to any existing topic. This was an operational risk for a platform that streams business-critical data.

ACLs were introduced at PayPal to ensure controlled access to Kafka clusters and to secure our platform by allowing access only via the SASL port. Applications must now authenticate and be authorized in order to gain access to Kafka clusters and topics. Each application needs to explicitly declare whether it is a producer or a consumer for the topic it wants to connect to. We have incorporated these security changes in our client library.
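The access model described here can be sketched as a default-deny lookup keyed on application identity, topic and operation. This is a simplified illustration rather than Kafka's authorizer: the `AclStore` class and the application and topic names are hypothetical, and real Kafka ACLs also cover other resource types such as consumer groups.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    app_context: str  # unique identity of the application (hypothetical naming)
    topic: str
    operation: str  # "WRITE" for producers, "READ" for consumers


class AclStore:
    """Default-deny store: access is allowed only if a matching ACL exists."""

    ROLE_TO_OPERATION = {"producer": "WRITE", "consumer": "READ"}

    def __init__(self) -> None:
        self._acls: set[Acl] = set()

    def grant(self, app_context: str, topic: str, role: str) -> None:
        """Record the declared intent (producer or consumer) as an ACL."""
        self._acls.add(Acl(app_context, topic, self.ROLE_TO_OPERATION[role]))

    def is_allowed(self, app_context: str, topic: str, operation: str) -> bool:
        return Acl(app_context, topic, operation) in self._acls


acls = AclStore()
acls.grant("checkout-service", "payments.events", "producer")
acls.grant("risk-engine", "payments.events", "consumer")

print(acls.is_allowed("checkout-service", "payments.events", "WRITE"))
print(acls.is_allowed("checkout-service", "payments.events", "READ"))
print(acls.is_allowed("unknown-app", "payments.events", "READ"))
```

Because every grant names an application and its role, the same data doubles as a record of which applications use which topics and why.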

Benefits

- A highly available and secure platform offering low latency for our business-critical workflows

- Record of every application accessing topics and clusters, and their intent

- We have implemented ACLs across all the Kafka clusters using a unique application context assigned to every PayPal application for identification. This has reduced maintenance effort dramatically.

PayPal Kafka Libraries

At PayPal, we need to ensure our clusters and clients connecting to them operate in a highly secure environment. Additionally, we need to ensure easy integration to multiple frameworks and services within PayPal to provide a seamless experience to our end users, with reduced time to onboard and enhanced focus on developer efficiency. To do this, we have introduced our own set of libraries, of which the major ones and their importance are highlighted below.

Resilient Client Library

The resilient client library integrates with our discovery service, without developers needing to know the implementation or configuration details. Once the client tries to establish connectivity to the cluster, this library will fetch the information on Kafka brokers, the default or overridden configuration for producer or consumer application at start up and more.

Benefits

- Topic portability within the Kafka clusters as well as different environments

- Reduces the impact to business with operational changes

- Developer efficiency

Monitoring Library

The monitoring library publishes critical metrics for client applications, which helps us to monitor the health of end user applications. We use Micrometer to publish these metrics, which allows us to change the metrics and the way we monitor our platform.

Benefits

- Applications can set alerts and be notified in case of any issues

- Helps us to monitor the Kafka platform and keep it stable

- Issues can be acted on within a few minutes

Kafka Security Library

All the applications should be SSL (Secure Sockets Layer) authenticated to connect to the Kafka clusters. This library pulls the required certificates and tokens to authenticate the application during the startup.

Benefits

- The Kafka platform at PayPal supports more than 800 applications. This library spares end users the overhead of key management events such as certificate updates and key rotations.

- Requires minimal know-how on authentication and authorization when connecting to Kafka clusters.

Kafka QA Platform

As Kafka evolved, we observed the need for a stable, highly available, production-like QA platform where our developers could confidently test their changes and go live. The older environment was cluttered with ad-hoc topics, all hosted on a handful of clusters, and its features differed from the production setup. Developers would create random topics for their testing, which caused issues when rolling out changes to production because the configuration or the cluster and topic details differed from those used for testing.

We redesigned and introduced a new QA platform for Kafka users to resolve these issues. The new QA platform provides a one-to-one mapping between production and QA clusters and follows the same security standards as the production setup. We host all the QA clusters and topics in Google Cloud Platform (GCP), with the brokers spread across multiple GCP zones and the rack-aware feature enabled to achieve high availability. Through a rigorous evaluation to find the right SKU without compromising performance, we also optimized our public cloud costs. Compared to the older platform, the new platform reduces cloud costs by 75% and improves performance by about 40%.

Benefits

- Reduces the time to roll out changes from QA to production

- One-To-One mapping with production clusters

- Cloud optimized solution

Monitoring and Alerting

One of the challenges of operating at scale is how efficiently we monitor our platform, detect issues and generate alerts so that we can act on them. The Kafka platform is heavily integrated into PayPal's monitoring and alerting systems. Apache Kafka provides a large number of metrics out of the box, but we have fine-tuned a subset of metrics that helps us identify issues faster with less overhead. Each of our brokers, ZooKeepers, MirrorMakers and clients sends these key metrics on the health of the application as well as the underlying machine. Each metric has an associated alert that is triggered whenever an abnormal threshold is met. We also have clear Standard Operating Procedures for every triggered alert, which makes it easier for the SRE team to resolve issues. This plays a major part in operating a platform at this scale.

We also have our own Kafka Metrics library, which filters the metrics down to the ones we track. These metrics are registered in a Micrometer registry and sent to the SignalFX backend via the SignalFX agent, which runs on all our brokers, ZooKeepers, MirrorMakers and Kafka clients.
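The combination of a filtered metric subset, per-metric thresholds and a procedure per alert can be sketched as follows. This is a toy model and not the Metrics library itself: the thresholds, the procedure identifiers and the `DiskUtilizationPercent` name are assumptions made up for illustration, while `UnderReplicatedPartitions`, `OfflinePartitionsCount` and `RequestQueueSize` are names of metrics that Kafka brokers expose.

```python
# Hypothetical rules: tracked metric -> alert threshold and the Standard
# Operating Procedure (SOP) to follow. All values are illustrative.
ALERT_RULES = {
    "UnderReplicatedPartitions": {"max": 0, "sop": "SOP-under-replicated"},
    "OfflinePartitionsCount": {"max": 0, "sop": "SOP-offline-partitions"},
    "DiskUtilizationPercent": {"max": 80, "sop": "SOP-disk-usage"},
}


def filter_metrics(raw: dict[str, float], rules: dict[str, dict]) -> dict[str, float]:
    """Keep only the metrics that have an alert rule and drop the rest."""
    return {name: value for name, value in raw.items() if name in rules}


def evaluate_alerts(metrics: dict[str, float], rules: dict[str, dict]) -> list[dict]:
    """Return one alert per metric whose value exceeds its threshold."""
    alerts = []
    for name, value in sorted(metrics.items()):
        rule = rules[name]
        if value > rule["max"]:
            alerts.append({"metric": name, "value": value, "sop": rule["sop"]})
    return alerts


raw_sample = {
    "UnderReplicatedPartitions": 4,
    "OfflinePartitionsCount": 0,
    "DiskUtilizationPercent": 62,
    "RequestQueueSize": 3,  # emitted by the broker but not part of the subset
}

kept = filter_metrics(raw_sample, ALERT_RULES)
alerts = evaluate_alerts(kept, ALERT_RULES)
for alert in alerts:
    print(f"ALERT {alert['metric']}={alert['value']} -> follow {alert['sop']}")
```

Tying each rule to a procedure is what keeps an alert actionable: whoever receives it knows which runbook applies without first having to diagnose the metric.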

Notable Open-Source contributions

We have contributed KIP-351, KIP-427 and KIP-517 to open-source Apache Kafka:

- KIP-351 increased the visibility of partitions that are under their minimum in-sync replicas (ISR)

- KIP-427 made it possible to monitor clusters for partitions that are at their minimum in-sync replicas

- KIP-517 made it possible to monitor user polling behavior in consumer applications
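The difference between the partition states that the first two KIPs expose is easy to confuse, so here is a small sketch of how they relate. The function is ours, and the example assumes a replication factor of 3 with `min.insync.replicas=2`, a setting the post does not state.

```python
def classify_partition(replicas: int, isr: int, min_isr: int) -> list[str]:
    """Classify a partition from its replica count, ISR size and min.insync.replicas."""
    states = set()
    if isr < replicas:
        states.add("under-replicated")  # at least one replica has fallen out of sync
    if isr == min_isr:
        states.add("at-min-isr")  # losing one more in-sync replica breaches the minimum
    if isr < min_isr:
        states.add("under-min-isr")  # producers using acks=all are rejected
    return sorted(states)


# Assumed settings: replication factor 3, min.insync.replicas=2.
for isr_size in (3, 2, 1):
    print(isr_size, classify_partition(replicas=3, isr=isr_size, min_isr=2))
```

An at-min-ISR partition is still fully writable but has no safety margin left, which is why it is worth alerting on before it becomes under-min-ISR.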

Configuration Management

Configuration management gives us complete information on our infrastructure. In case of data loss, it is our single source of truth and lets us rebuild the clusters in a couple of hours. All Kafka infrastructure metadata, such as details on topics, clusters and applications, is stored in our internal configuration management system. The stored metadata is backed up frequently to ensure we have the most recent data.

Benefits

- Serves as source of truth in case of any conflicts

- Re-creating clusters, topics, etc. in case of recovery

Enhancements and Automation

As with any large-scale system, we have built the tools to quickly carry out operational tasks. We have automated CRUD operations for clusters and topics, metadata management, patching our infra and deploying changes. Some of them are highlighted below in further detail.

Patching security vulnerabilities

We use bare-metal (BM) hosts for the Kafka brokers and virtual machines (VMs) for ZooKeeper and MirrorMaker in our environment.

All hosts within the Kafka platform need to be patched at frequent intervals of time to resolve any security vulnerabilities. This patching is performed by our internal patching system.

The Kafka topics are configured with a replication factor of three. Patching normally requires BM restarts, which can cause under-replicated partitions depending on how long the patching takes. If several brokers were restarted in quick succession, data could be lost. Therefore, we built a plugin that queries the under-replicated partition status before patching a host. This ensures that multiple clusters can be patched in parallel, while within each cluster only a single broker is patched at a time, with no data loss.
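The gating logic can be sketched as a loop that refuses to patch the next broker until the cluster reports zero under-replicated partitions. This is a minimal illustration, not the actual plugin: `patch_cluster`, `FakeCluster`, the polling parameters and the simulated recovery rate are all hypothetical.

```python
import time
from typing import Callable, Iterable


def patch_cluster(
    brokers: Iterable[str],
    under_replicated_count: Callable[[], int],
    patch_host: Callable[[str], None],
    poll_interval_s: float = 30.0,
    max_polls: int = 20,
    sleep: Callable[[float], None] = time.sleep,
) -> list[str]:
    """Patch brokers one at a time, each only when no partition is under-replicated."""
    patched = []
    for broker in brokers:
        for _ in range(max_polls):
            if under_replicated_count() == 0:
                break
            sleep(poll_interval_s)  # wait for replicas to catch up
        else:
            raise RuntimeError(f"cluster still under-replicated, not patching {broker}")
        patch_host(broker)
        patched.append(broker)
    return patched


class FakeCluster:
    """Simulated cluster: a restart leaves 10 partitions behind, 5 recover per poll."""

    def __init__(self) -> None:
        self.urp = 0
        self.log: list[tuple[str, int]] = []

    def under_replicated_count(self) -> int:
        current = self.urp
        self.urp = max(0, self.urp - 5)
        return current

    def patch_host(self, broker: str) -> None:
        self.log.append((broker, self.urp))  # record the state at patch time
        self.urp = 10


cluster = FakeCluster()
patched = patch_cluster(
    ["broker-1", "broker-2", "broker-3"],
    cluster.under_replicated_count,
    cluster.patch_host,
    sleep=lambda seconds: None,  # skip real waiting in this simulation
)
print(patched, cluster.log)
```

Each cluster can run its own instance of such a loop, which matches the behavior described above: clusters proceed in parallel while brokers within a cluster proceed strictly one after another.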

Security Enhancements

Prior to version 2.6.0, Apache Kafka only supported loading certificates by reading them from the filesystem. We therefore contributed KIP-519 to open source, which made the SSL context/engine extensible. This helped Kafka brokers and clients adhere to PayPal security standards.

Operational Automation

Listed below are the key automations in place, which help us manage our day-to-day operations.

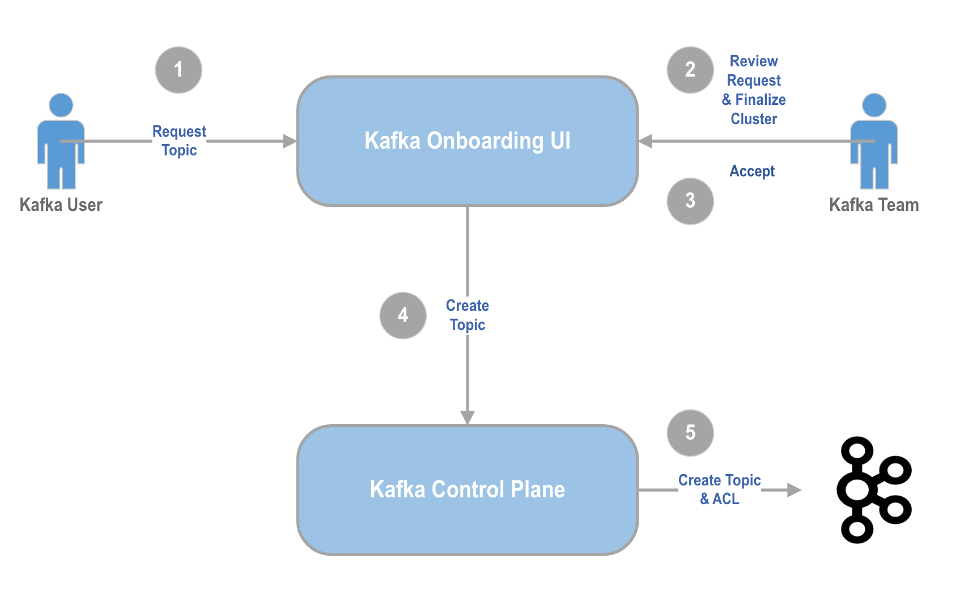

Topic Onboarding

Application teams are required to submit a request to onboard a new topic via the Onboarding Dashboard. The team reviews the capacity requirements for the new topic and onboards it to one of the available clusters. The availability of clusters is determined using our capacity analysis tool, which is integrated into the onboarding workflow. For each application being onboarded to the Kafka system for the first time, a unique token is generated and assigned. This token is used to authenticate the client’s access to the Kafka topic. ACLs are then created for the specific application and topic based on the application's role. At this point, the application can successfully connect to the Kafka topic.
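The workflow above can be sketched as three steps: a capacity check, token issuance for first-time applications, and ACL creation. Everything in this snippet is hypothetical, including the field names, the throughput-based capacity measure and the choice of the cluster with the most headroom. The post does not describe how the capacity analysis tool actually selects a cluster.

```python
import secrets


def onboard_topic(request: dict, clusters: list[dict], app_tokens: dict, acls: set) -> dict:
    """Place a topic on a cluster, issue a token for new apps, and record the ACL."""
    # 1. Capacity check: keep clusters with enough headroom (assumed measure).
    needed = request["expected_mb_per_sec"]
    candidates = [c for c in clusters if c["free_mb_per_sec"] >= needed]
    if not candidates:
        raise ValueError("no cluster has enough capacity for this topic")
    cluster = max(candidates, key=lambda c: c["free_mb_per_sec"])
    cluster["free_mb_per_sec"] -= needed

    # 2. A unique token is generated only the first time an application onboards.
    app = request["app"]
    token_issued = app not in app_tokens
    if token_issued:
        app_tokens[app] = secrets.token_hex(16)

    # 3. ACL for this application, topic and role.
    acls.add((app, request["topic"], request["role"]))
    return {"cluster": cluster["name"], "topic": request["topic"], "token_issued": token_issued}


clusters = [
    {"name": "cluster-a", "free_mb_per_sec": 20},
    {"name": "cluster-b", "free_mb_per_sec": 50},
]
app_tokens: dict = {}
acls: set = set()

first = onboard_topic(
    {"app": "risk-engine", "topic": "risk.scores", "role": "producer", "expected_mb_per_sec": 30},
    clusters, app_tokens, acls,
)
second = onboard_topic(
    {"app": "risk-engine", "topic": "risk.audit", "role": "producer", "expected_mb_per_sec": 10},
    clusters, app_tokens, acls,
)
print(first)
print(second)
```

In this sketch the second request reuses the application's existing token, mirroring the rule that a token is generated only when an application joins the platform for the first time.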

MirrorMaker Onboarding

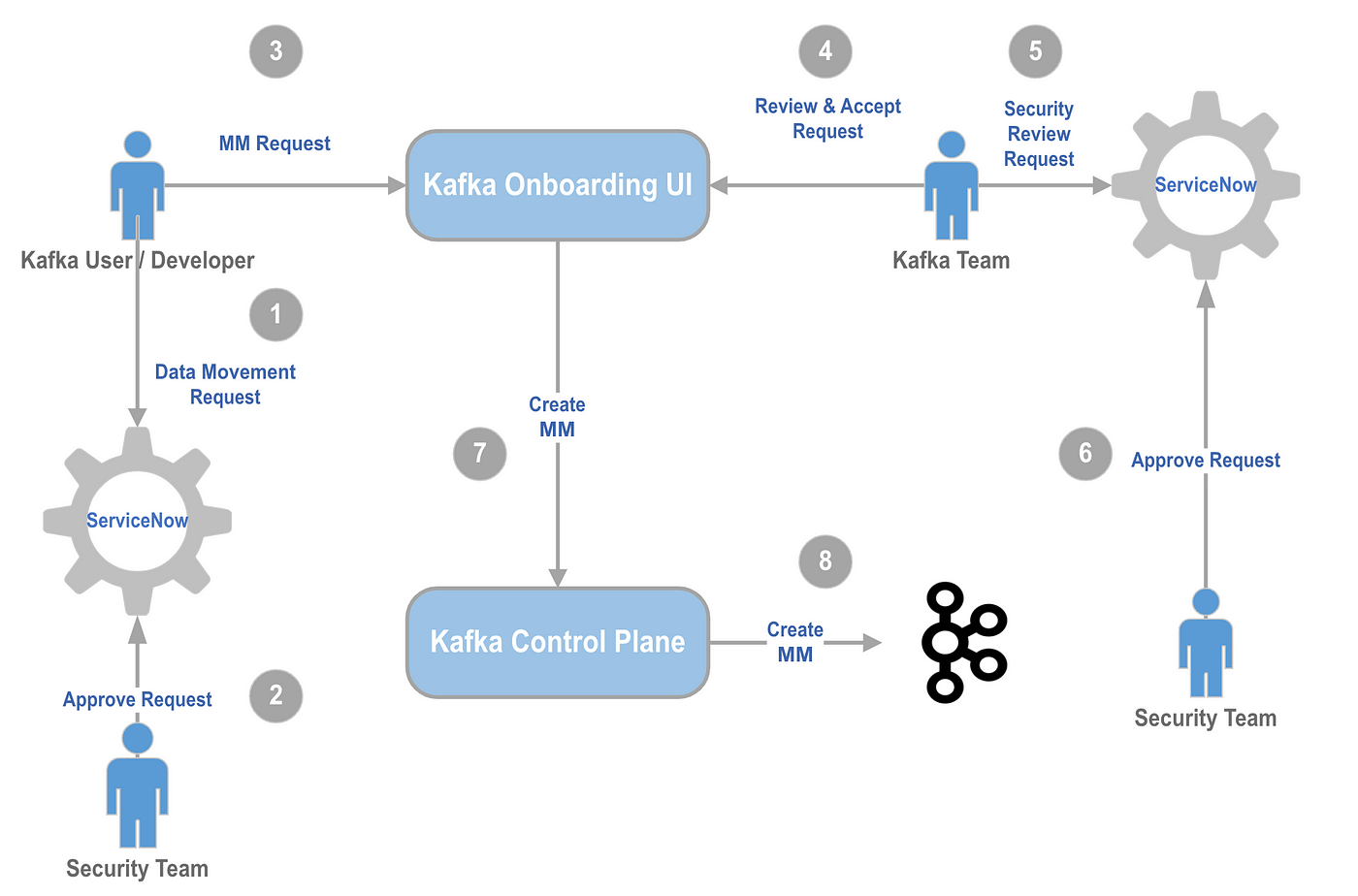

We use MirrorMakers to mirror data from a source cluster to a destination cluster for specific use cases where the producer and consumer applications run in different zones. In such cases, we set up MirrorMakers by following the process shown in the diagram.

Repartition Assignment Enhancements

Partition reassignment is the process of redistributing the partitions of a topic among the available brokers in a Kafka cluster. It is required when we flex up the Kafka brokers, carry out maintenance on a subset of brokers, or replace brokers. By default, Kafka reassigns all the partitions, including those hosted on healthy brokers. We have made modifications to reassign only the under-replicated partitions hosted on the affected brokers. This enhancement avoids unpredictably long reassignment times. In the past, reassignment without this optimization made clusters unavailable for up to a couple of days, depending on the volume of data flowing into the clusters, and severely impacted availability.
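One way to picture the targeted approach is a planner that touches only the partitions with a replica on an affected broker and leaves everything else alone. This is a sketch under our own assumptions, not PayPal's modification: the function name, the least-loaded replacement heuristic and the sample topic are hypothetical. The output follows the JSON layout that Kafka's partition reassignment tool accepts (`version` plus a `partitions` list of topic, partition and replicas).

```python
import json
from collections import Counter


def plan_reassignment(assignment: dict, affected: set, healthy: list) -> dict:
    """Build a reassignment plan that only moves replicas off affected brokers."""
    # Current replica count per healthy broker, used to pick the least loaded one.
    load = Counter({broker: 0 for broker in healthy})
    for replicas in assignment.values():
        for broker in replicas:
            if broker in load:
                load[broker] += 1

    moves = []
    for (topic, partition), replicas in sorted(assignment.items()):
        if not any(broker in affected for broker in replicas):
            continue  # partitions entirely on healthy brokers are left untouched
        new_replicas = []
        for broker in replicas:
            if broker in affected:
                candidates = [
                    h for h in healthy if h not in replicas and h not in new_replicas
                ]
                if not candidates:
                    raise ValueError(f"no healthy broker available for {topic}-{partition}")
                broker = min(candidates, key=lambda h: (load[h], h))
                load[broker] += 1
            new_replicas.append(broker)
        moves.append({"topic": topic, "partition": partition, "replicas": new_replicas})
    return {"version": 1, "partitions": moves}


current = {
    ("orders", 0): [1, 2, 3],
    ("orders", 1): [2, 3, 4],
    ("orders", 2): [4, 5, 1],  # no replica on broker 3, so it is not moved
}
plan = plan_reassignment(current, affected={3}, healthy=[1, 2, 4, 5])
print(json.dumps(plan, indent=2))
```

Only two of the three partitions appear in the resulting plan, so the amount of data copied scales with what was on the affected broker rather than with the size of the whole cluster.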

Key Learnings

- Operating Kafka on a large scale requires tooling which helps with regular operations such as cluster installation, topic administration, patching security vulnerabilities, etc. We are continuously working on building and improving our existing automation and tools to reduce the operational overhead as well as reduce the manual work.

- Alerting and monitoring important health metrics are extremely critical for the infrastructure to be highly available and to avoid any impact to the business. Along with monitoring the metrics such as bytes in, bytes out, CPU utilization, disk utilization, etc., we need to constantly work towards fine tuning our metrics to ensure we receive actionable alerts.

- Initially, due to limited engineering capacity, our focus was on building tools to onboard multiple applications and use cases to the Kafka platform. Over time, this resulted in us losing track of the applications, which severely impacted us while carrying out maintenance. We introduced ACLs to get better insight into the applications connecting to our platform and, at the same time, to improve the security of our platform. To keep ACL management from becoming an overhead, we are working on tools that automate the manual effort.

- Benchmarking the cluster performance on multiple environments (on-prem / public cloud) with different configurations helped us to gain insight and achieve operational efficiency, both in terms of performance and cost.

Conclusion

The Kafka platform at PayPal since its inception has always sought to provide its end users with a seamless way to integrate and stream the data with the least amount of friction and the highest availability. We strive to build the necessary tools to ensure our platform seamlessly scales to handle increasing data traffic. As we do this, we learn every day, discovering innovative ways to enhance our platform and improve our ability to provide an amazing user experience.

We hope this was an interesting read and would love to hear any thoughts or feedback.

Acknowledgement

The success of the Kafka platform at PayPal has been a massive team effort. A lot of people have contributed over the years to help make the Kafka platform at PayPal highly available and stable. We would like to thank Sowmia Gopinadhan, Maulin Vasavada, Kevin Lu, Na Yang, Thomas Zhou, Sanat Yelchuri, Renuka Metukuru, Lei Huang, Suresh Balasubramanian, Rajesh Alane, Ying Wang, Ravi Singh, Santosh Singh, Anh Tran and Shiyao Wu for their contributions. Over the years this team has greatly benefited from the mentorship, support and sponsorship of our leaders (past and present): Omkar Pendse, Doron Maimon and Srikrishna Gopalakrishnan.