Advanced Caching Strategies for Faster PayPal Checkout Integrations

Nov 17, 2025

10 min read

A slow checkout experience can cost businesses sales, which makes performance optimization critical for checkout integrations. Effective caching strategies reduce API response times, minimize server load, and create seamless payment flows that convert browsers into buyers. This guide explores seven proven caching approaches that help developers build faster, more reliable checkout experiences while maintaining security and data integrity. From local caching for immediate access to distributed systems for enterprise-scale operations, these strategies cover the range of performance needs in modern e-commerce environments.

1. PayPal Local Caching

Local caching stores frequently accessed data on the same machine as your application, delivering the fastest possible data retrieval by eliminating network overhead entirely. This approach works well for checkout integrations that handle moderate traffic or need ultra-low latency for specific operations.

The primary advantage of local caching is speed: data access happens at memory speed, without network delays. This makes it ideal for storing configuration data, merchant settings, or frequently referenced product information during checkout flows. However, local caches are inherently limited in size and cannot be shared across multiple application instances or servers.

According to caching performance research, local caching offers the fastest data access but is limited in size and cannot be shared across nodes. This constraint makes it most suitable for single-server deployments or specific high-frequency lookups that don't require cross-system synchronization.

| Cache type | Speed | Scalability | Complexity | Best use case |
| --- | --- | --- | --- | --- |
| Local | Fastest | Limited | Low | Single server, high-frequency data |
| Distributed | Fast | High | Medium | Multi-server shared data |
| Edge | Variable | Highest | High | Global content delivery |
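A local cache can be as simple as an in-process dictionary with expiry times. The sketch below is a minimal, hypothetical Python example (the class name `LocalTTLCache` and its parameters are illustrative, not part of any PayPal SDK). It shows both the speed advantage, a plain memory lookup, and the limitations described above: a size cap, and data that lives only in the current process.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class LocalTTLCache:
    """In-process cache: entries live in this process's memory only,
    so they are not shared with other application instances."""

    def __init__(self, ttl_seconds: float, max_entries: int = 1024,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._max = max_entries
        self._clock = clock
        self._data: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Expired: drop the entry and report a miss.
            del self._data[key]
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        if key not in self._data and len(self._data) >= self._max:
            # Size limit reached: evict the oldest-inserted entry
            # (dicts preserve insertion order in Python 3.7+).
            oldest = next(iter(self._data))
            del self._data[oldest]
        self._data[key] = (self._clock() + self._ttl, value)
```

For example, `LocalTTLCache(ttl_seconds=300)` could hold merchant settings that are read on every checkout request. Each application instance keeps its own copy, so a change picked up by one server is not visible to the others until their entries expire.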

2. PayPal Distributed Caching

Distributed caching systems store cached data across multiple networked nodes, enabling horizontal scaling and shared data access across your entire checkout integration infrastructure. This approach becomes essential when handling high-traffic commerce environments where multiple application servers need consistent access to the same cached information.

Unlike local caching, distributed systems allow multiple servers to share cached API responses, user session data, and transaction information. This shared access ensures consistency across your application while providing fault tolerance so that if one cache node fails, others continue serving requests without interruption.

The trade-off is increased network latency compared to local caching, because data must travel between cache nodes and application servers. However, distributed caching research notes that because these systems span multiple nodes, they offer scalability benefits that, for most high-volume applications, outweigh the modest added latency.
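For a distributed cache to work, every application server must agree on which node holds a given key. One common technique, used by many Memcached client libraries, is consistent hashing; Redis Cluster instead assigns keys to fixed hash slots. The Python sketch of a consistent-hash ring below is illustrative only (node names such as `cache-a` are hypothetical), and in practice your cache client or managed service handles key placement for you.

```python
import bisect
import hashlib
from typing import Dict, List


class ConsistentHashRing:
    """Maps cache keys to cache nodes so every app server picks the same node
    for a given key. Removing a node only remaps the keys that node owned."""

    def __init__(self, nodes: List[str], replicas: int = 100) -> None:
        self._replicas = replicas          # virtual points per node, smooths the distribution
        self._ring: Dict[int, str] = {}    # hash position -> node name
        self._positions: List[int] = []    # sorted hash positions
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value: str) -> int:
        # Stable across processes, unlike Python's built-in hash().
        return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)

    def add_node(self, node: str) -> None:
        for i in range(self._replicas):
            pos = self._hash(f"{node}#{i}")
            self._ring[pos] = node
            bisect.insort(self._positions, pos)

    def remove_node(self, node: str) -> None:
        for i in range(self._replicas):
            pos = self._hash(f"{node}#{i}")
            del self._ring[pos]
            self._positions.remove(pos)

    def node_for(self, key: str) -> str:
        if not self._positions:
            raise RuntimeError("no cache nodes configured")
        pos = self._hash(key)
        # First ring position clockwise from the key's hash, wrapping around.
        idx = bisect.bisect(self._positions, pos) % len(self._positions)
        return self._ring[self._positions[idx]]
```

Because only the keys owned by a removed node move, losing one node invalidates roughly that node's share of cached entries rather than the whole cache.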

3. PayPal Edge Caching with CDNs

Edge caching positions cached resources on geographically distributed servers, serving users from the nearest location to minimize latency and improve global checkout performance. This strategy proves particularly valuable for static JavaScript libraries, checkout button assets, and other resources accessed by customers worldwide.

Content delivery networks (CDNs) implement edge caching by maintaining copies of your PayPal integration assets at multiple geographic locations. When a customer initiates checkout, they receive scripts and resources from the nearest edge server rather than your origin server, significantly reducing load times and improving user experience.

While edge caching excels at reducing global latency, it introduces complexity in cache invalidation and content updates. As noted in caching strategy analysis, placing cache at the edge cuts lag but complicates global invalidation processes.

Configure your CDN to cache static PayPal SDK files, images, and stylesheets while ensuring dynamic checkout elements bypass edge caching. Use appropriate cache-control headers to balance performance with freshness requirements, particularly for PayPal's frequently updated JavaScript libraries.
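One way to express that policy is to choose `Cache-Control` values per route on your origin server, which the CDN then honors. This is a minimal sketch for assets you serve yourself; the path prefixes and max-age values are hypothetical placeholders, and the `s-maxage` directive applies only to shared caches such as CDNs.

```python
from typing import Dict

# Hypothetical path prefixes for this sketch; adjust to your own routes.
STATIC_PREFIXES = ("/static/", "/assets/", "/images/")
DYNAMIC_PREFIXES = ("/api/checkout/", "/api/orders/")


def cache_headers_for(path: str, fingerprinted: bool = False) -> Dict[str, str]:
    """Choose Cache-Control headers so a CDN caches static assets
    but never stores dynamic checkout responses."""
    if path.startswith(DYNAMIC_PREFIXES):
        # Order and checkout data is per-customer: never cache at the edge or in the browser.
        return {"Cache-Control": "no-store"}
    if path.startswith(STATIC_PREFIXES):
        if fingerprinted:
            # Content-hashed filenames change on every deploy, so they can be cached for a year.
            return {"Cache-Control": "public, max-age=31536000, immutable"}
        # Un-versioned assets: browsers reuse them for 5 minutes, shared caches (CDN) for up to an hour.
        return {"Cache-Control": "public, max-age=300, s-maxage=3600"}
    # Anything unclassified defaults to not being cached.
    return {"Cache-Control": "no-store"}
```

Fingerprinted filenames (for example, `app.3f9a.js`) make long-lived caching safe because a new deploy produces a new URL, which sidesteps the global invalidation problem mentioned above.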

4. PayPal Cache-Aside (Lazy Loading)

Cache-aside, also known as lazy loading, gives your application complete control over cache management by checking the cache before querying the primary data source. If data exists in the cache, it's returned immediately; if not, the application fetches from PayPal's APIs and stores the result for future requests.

This strategy works exceptionally well for API responses that don't change frequently, such as merchant account information, payment method configurations, or product catalog data. The application maintains full control over what gets cached and when, allowing for sophisticated caching logic tailored to specific business requirements.

Performance optimization studies indicate that lazy loading reduces unnecessary cache storage by only caching data on demand, though it may occasionally serve stale data if not properly managed.

The cache-aside implementation follows a predictable pattern:

1. Check cache for requested PayPal data

2. If found, return cached response immediately

3. If not found, query PayPal API directly

4. Store API response in cache for future requests

5. Return data to the requesting application

This approach works particularly well for payment method details, shipping calculations, and merchant configuration data that remains relatively stable throughout checkout sessions.
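The five cache-aside steps map almost line for line onto code. In this minimal Python sketch, `fetch` is a hypothetical callable standing in for whatever PayPal API request you make, and a plain dictionary stands in for the cache store; the TTL is an added assumption that limits how long stale data can be served.

```python
import time
from typing import Any, Callable, Dict, Tuple

# Cache entries are (expires_at, value); a plain dict stands in for any cache store.
Cache = Dict[str, Tuple[float, Any]]


def get_with_cache_aside(cache: Cache, key: str, fetch: Callable[[], Any],
                         ttl_seconds: float = 300,
                         clock: Callable[[], float] = time.monotonic) -> Any:
    # 1. Check the cache for the requested data.
    entry = cache.get(key)
    if entry is not None and clock() < entry[0]:
        # 2. Found and not expired: return the cached response immediately.
        return entry[1]
    # 3. Not found (or expired): query the source, e.g. a PayPal API call wrapped in `fetch`.
    value = fetch()
    # 4. Store the response for future requests; the TTL bounds how stale it can get.
    cache[key] = (clock() + ttl_seconds, value)
    # 5. Return the data to the caller.
    return value
```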

5. PayPal Write-Through Caching

Write-through caching keeps the cache consistent with the primary data store by writing every change to both as part of the same operation. Because the cache is updated whenever the data changes, cached PayPal information reflects the latest successful write, which removes most concerns about stale data affecting checkout processes.

When implementing write-through caching for PayPal integrations, every update to transaction status, user preferences, or payment information triggers immediate cache updates alongside database writes. This approach guarantees data freshness but may slow write operations due to the dual update requirement.

According to caching consistency research, write-through systems update cache immediately when source data changes, ensuring freshness while potentially impacting write performance.
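A minimal sketch of the write path, assuming dictionaries stand in for both the primary store and the cache (the class name and the `order:…:status` key format are hypothetical). The write goes to the source of truth first and then to the cache within the same call, which is where the extra write latency comes from.

```python
from typing import Any, Dict, Optional


class WriteThroughCache:
    """Every write goes to the primary store and then to the cache in the same call,
    so reads served from the cache reflect the latest successful write."""

    def __init__(self, store: Dict[str, Any]) -> None:
        self.store = store                 # stand-in for the primary database
        self.cache: Dict[str, Any] = {}    # stand-in for a cache such as Redis or Memcached

    def write(self, key: str, value: Any) -> None:
        # Write the source of truth first; if this raises, the cache is never touched.
        self.store[key] = value
        self.cache[key] = value

    def read(self, key: str) -> Optional[Any]:
        if key in self.cache:
            return self.cache[key]
        # Miss (e.g. cold start or eviction): load from the store and repopulate the cache.
        value = self.store.get(key)
        if value is not None:
            self.cache[key] = value
        return value
```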

Write-through caching works exceptionally well for:

- Transaction status updates during PayPal checkout flows

- User payment preferences and saved methods

- Real-time inventory levels affecting payment options

- Checkout session information requiring immediate consistency

| Aspect | Benefit | Trade-off |
| --- | --- | --- |
| Consistency | Current data | Slower writes |
| Reliability | Fewer misses on recently written data | Increased complexity |
| Performance | Fast reads | Write latency |

6. PayPal Eventual Consistency Caching

Eventual consistency caching accepts temporary differences between cached and source data, allowing systems to synchronize over time rather than immediately. This approach prioritizes scalability and performance over perfect real-time accuracy, making it suitable for specific PayPal integration scenarios.

Use eventual consistency for non-critical PayPal data where slight delays in updates won't impact checkout functionality. Examples include analytics data, reporting information, promotional content, or notification systems where perfect real-time accuracy isn't essential for business operations.

This strategy excels in distributed environments where immediate consistency across all nodes would create performance bottlenecks. Instead of forcing synchronous updates, eventual consistency allows cache nodes to update independently and converge on the correct state over time.

Configure cache refresh intervals based on your business requirements. More time-sensitive data might refresh every few minutes, while reporting data could update hourly or daily. Monitor cache hit rates and data freshness to tune refresh timing for your integration.

The scalability benefits become particularly apparent in high-traffic scenarios where immediate consistency would require expensive distributed locking mechanisms or complex coordination protocols that could slow down checkout processes.
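To make convergence concrete, the sketch below models cache nodes that accept writes locally and replicate them to each other later. It is a simplified, single-process illustration: the in-memory queue stands in for asynchronous replication, and a global counter stands in for the timestamps or version numbers a real system would use to decide which write wins (last-writer-wins). Node and key names are hypothetical.

```python
import itertools
from collections import deque
from typing import Any, Deque, Dict, List, Optional, Tuple


class EventuallyConsistentCache:
    """Writes land on one node immediately and reach the other nodes later,
    when replicate() drains the pending queue."""

    def __init__(self, node_names: List[str]) -> None:
        self.nodes: Dict[str, Dict[str, Tuple[int, Any]]] = {n: {} for n in node_names}
        self._pending: Deque[Tuple[str, str, int, Any]] = deque()  # (target, key, version, value)
        self._versions = itertools.count(1)  # stand-in for a real version/timestamp source

    def write(self, origin: str, key: str, value: Any) -> None:
        version = next(self._versions)
        self._apply(origin, key, version, value)
        for name in self.nodes:
            if name != origin:
                self._pending.append((name, key, version, value))

    def _apply(self, node: str, key: str, version: int, value: Any) -> bool:
        current = self.nodes[node].get(key)
        # Last-writer-wins: ignore updates older than what the node already holds.
        if current is None or version > current[0]:
            self.nodes[node][key] = (version, value)
            return True
        return False

    def read(self, node: str, key: str) -> Optional[Any]:
        # May return an older value until replication catches up.
        entry = self.nodes[node].get(key)
        return None if entry is None else entry[1]

    def replicate(self) -> int:
        """Apply queued updates in order; returns how many changed a node."""
        applied = 0
        while self._pending:
            target, key, version, value = self._pending.popleft()
            if self._apply(target, key, version, value):
                applied += 1
        return applied
```

Between a write and the next `replicate()` call, a reader on another node sees the old value; that window is the temporary difference eventual consistency accepts, and the version check is what makes all nodes settle on the same value afterwards.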

7. PayPal Cache Invalidation Strategies

Cache invalidation removes or updates cached entries when underlying data changes, preventing stale information from disrupting checkout flows or creating inconsistent user experiences. Effective invalidation strategies balance data freshness with system performance.

Cache management research emphasizes that cache invalidation updates or clears cached data when the underlying source changes, making it critical for maintaining data accuracy in payment systems.

Key invalidation methods include:

· Time-to-Live (TTL) Expiration: Set automatic expiration times for cached PayPal data based on how frequently it changes. Use shorter TTL values for dynamic data like inventory levels and longer periods for stable configuration information.

· Event-Driven Invalidation: Trigger cache updates when specific PayPal events occur, such as payment status changes, user profile updates, or merchant configuration modifications.

· Manual Invalidation: Provide administrative controls for immediately clearing specific cache entries during maintenance, troubleshooting, or emergency situations.

· Write-Invalidate: Automatically clear related cache entries whenever the underlying data changes, so the next read fetches fresh data instead of showing outdated information during checkout.

For PayPal integrations, focus invalidation efforts on transaction statuses, user session data, and payment method information, where stale data could cause checkout failures or security concerns.
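Event-driven invalidation often hangs off webhooks. The minimal sketch below assumes your webhook handler has already verified the event and extracted its event type and resource ID; the mapping from event types to cache keys, and the key naming scheme itself, are hypothetical. `CHECKOUT.ORDER.APPROVED`, `PAYMENT.CAPTURE.COMPLETED`, and `PAYMENT.CAPTURE.DENIED` are PayPal webhook event names, but check the webhooks event reference for the events your integration actually subscribes to.

```python
from typing import Any, Dict, List

# Hypothetical mapping from webhook event types to this application's cache key patterns.
INVALIDATION_RULES: Dict[str, List[str]] = {
    "CHECKOUT.ORDER.APPROVED": ["order:{id}", "order-status:{id}"],
    "PAYMENT.CAPTURE.COMPLETED": ["capture:{id}"],
    "PAYMENT.CAPTURE.DENIED": ["capture:{id}"],
}


def invalidate_for_event(cache: Dict[str, Any], event_type: str, resource_id: str) -> List[str]:
    """Delete cache entries affected by an already-verified webhook event.
    Returns the keys that were actually removed."""
    removed: List[str] = []
    for pattern in INVALIDATION_RULES.get(event_type, []):
        key = pattern.format(id=resource_id)
        if key in cache:
            del cache[key]
            removed.append(key)
    return removed
```

Unknown event types fall through without touching the cache, so subscribing to additional events later does not require changes here until you decide which keys they should clear.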

Client-Side vs Server-Side Caching for Checkout Integrations

Understanding the distinction between client-side and server-side caching helps developers choose the right approach for different aspects of PayPal integrations while maintaining security and compliance requirements.

Client-side caching stores data in users' browsers, making it ideal for static assets like PayPal JavaScript libraries, images, and stylesheets. This approach reduces server load and improves perceived performance by serving cached resources directly from the user's device.

Server-side caching keeps data on your application servers or dedicated cache infrastructure, giving you centralized control over sensitive PayPal API responses and user data. Keeping payment information inside your controlled environment supports, but does not by itself guarantee, compliance with PCI DSS requirements.

| Caching type | Use case | Data sensitivity | Speed | Security control |
| --- | --- | --- | --- | --- |
| Client-side | Static assets, UI elements | Low | Fastest | Limited |
| Server-side | API responses, user data | High | Fast | Complete |

For PayPal integrations, use client-side caching for public resources like checkout button images and JavaScript libraries, and server-side caching for sensitive data such as transaction details, user payment methods, and API authentication tokens. Any data subject to PCI DSS requirements should be cached only on the server side, so sensitive payment information never leaves your secure server environment.

How to Implement Caching for PayPal API Responses

Implementing effective caching for PayPal API responses requires careful planning to balance performance gains with data accuracy and security requirements. Focus on caching stable data while ensuring dynamic information remains fresh and accurate. Progressive web app research identifies static resources, configuration data, and less frequently changing API responses as safe caching candidates, while warning against caching sensitive or dynamic data.

Step 1: Identify Cacheable PayPal Data

Evaluate your PayPal API usage to identify responses suitable for caching:

· Merchant account configuration and settings

· Product catalog information and pricing

· Shipping rate calculations for standard options

· Payment method availability by region

· Static content like terms of service or help text

Step 2: Select Optimal Caching Strategy

Choose caching approaches based on your specific requirements:

· Use cache-aside for flexible control over what gets cached

· Implement write-through for critical data requiring immediate consistency

· Deploy distributed caching for multi-server environments

· Consider edge caching for globally accessed static resources

Step 3: Implement Cache Management

Comprehensive caching guides recommend applying cache invalidation and fallback mechanisms to ensure data accuracy and system reliability. Configure appropriate TTL values based on data volatility, implement fallback mechanisms for cache failures, and establish monitoring systems to track cache hit rates and performance improvements. Test cache behavior thoroughly in staging environments before deploying to production systems handling real PayPal transactions.
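The sketch below combines the three pieces of Step 3: TTLs chosen per data category, a fallback that goes straight to the source when the cache is unavailable, and hit/miss counters for monitoring. It is written against a hypothetical backend with `get` and `set(key, value, ttl)` methods; the category names and TTL values are placeholders to tune, not recommendations.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

# Illustrative TTLs per data category (seconds); tune to how often your data actually changes.
TTL_BY_CATEGORY: Dict[str, float] = {
    "merchant_config": 3600,
    "payment_methods_by_region": 900,
    "shipping_rates": 300,
    "static_content": 86400,
}


class InMemoryBackend:
    """Stand-in for a real cache client such as Redis or Memcached."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None or self._clock() >= entry[0]:
            return None
        return entry[1]

    def set(self, key: str, value: Any, ttl: float) -> None:
        self._data[key] = (self._clock() + ttl, value)


class ResilientCache:
    def __init__(self, backend: Any) -> None:
        self.backend = backend
        self.hits = self.misses = self.errors = 0

    def get_or_load(self, key: str, category: str, loader: Callable[[], Any]) -> Any:
        ttl = TTL_BY_CATEGORY[category]  # unknown categories fail loudly, not silently
        try:
            cached = self.backend.get(key)
        except Exception:
            # A cache outage must not break checkout: fall back to the source.
            self.errors += 1
            return loader()
        if cached is not None:
            self.hits += 1
            return cached
        self.misses += 1
        value = loader()
        try:
            self.backend.set(key, value, ttl)
        except Exception:
            self.errors += 1  # still return fresh data even if caching it failed
        return value

    @property
    def hit_rate(self) -> float:
        lookups = self.hits + self.misses
        return self.hits / lookups if lookups else 0.0
```

Exporting `hits`, `misses`, `errors`, and `hit_rate` to your metrics system gives you the monitoring signal Step 3 calls for; a sudden rise in `errors` points at the cache layer rather than PayPal's APIs.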

For REST API integrations, obtaining an access token is a critical first step for authentication.
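PayPal's OAuth 2.0 token endpoint returns an `expires_in` value (in seconds) along with the `access_token`, so the token itself is a natural server-side cache entry: requesting a new one for every API call adds latency for no benefit. A minimal sketch, assuming `fetch_token` is a hypothetical function that performs the client-credentials request and returns the parsed JSON; the refresh margin is an illustrative default.

```python
import threading
import time
from typing import Any, Callable, Dict, Optional


class AccessTokenCache:
    """Caches an OAuth access token until shortly before it expires.

    `fetch_token` is a hypothetical callable that performs the token request and
    returns the parsed JSON response containing `access_token` and `expires_in`.
    """

    def __init__(self, fetch_token: Callable[[], Dict[str, Any]],
                 refresh_margin_seconds: float = 60,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_token
        self._margin = refresh_margin_seconds
        self._clock = clock
        self._token: Optional[str] = None
        self._refresh_at = 0.0
        self._lock = threading.Lock()  # prevents several threads refreshing at once

    def get(self) -> str:
        with self._lock:
            if self._token is None or self._clock() >= self._refresh_at:
                data = self._fetch()
                self._token = data["access_token"]
                # Refresh a little early so in-flight requests are unlikely to carry an expired token.
                self._refresh_at = self._clock() + max(0.0, data["expires_in"] - self._margin)
            return self._token
```

Keep this cache on the server only; access tokens fall squarely into the sensitive data that should never be cached client-side.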

Integrate Thoughtfully

Caching and retry logic aren’t just optimization tricks; they reflect engineering maturity. A thoughtful checkout integration is resilient under stress, scalable across markets, and economical to operate. With the right caching and retry strategy in place, your integration won’t just work; it will perform.

Refer to PayPal's API Integration Guide for more information.

Recommended

Developer Day for Fastlane by PayPal: A Masterclass of Innovation

5 min read

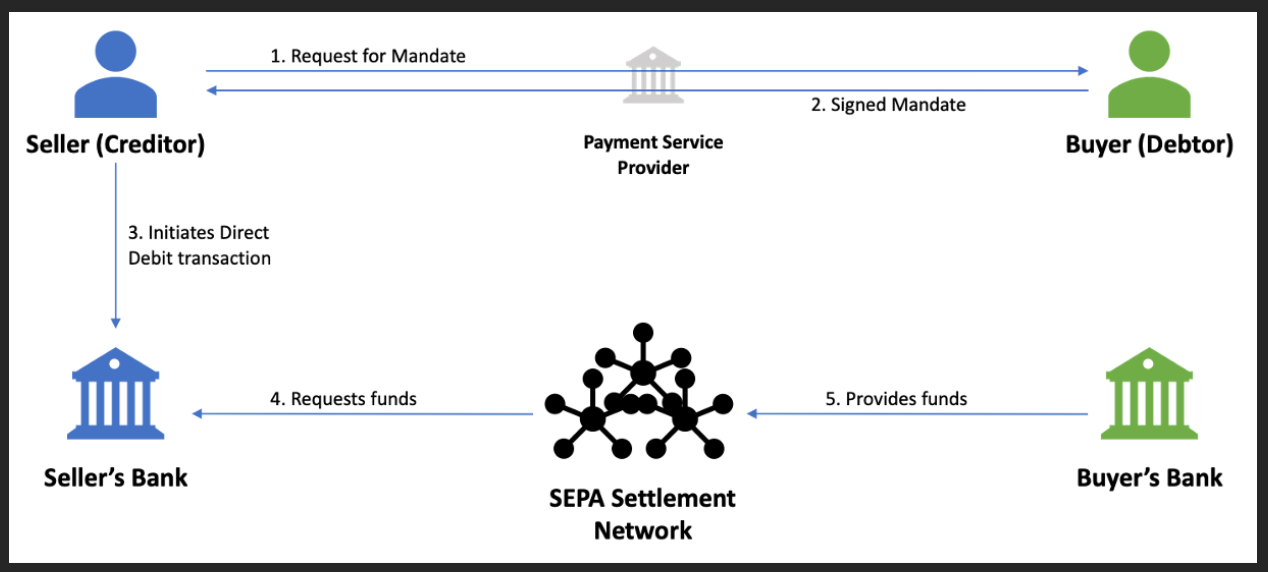

Pay by Bank for E-Commerce | Using Bank Accounts to Make Purchases with SMBs [SEPA]

5 min read

PayPal Dev Days 2025: Building Smarter Commerce

5 min read