Building Responsible AI for Payments and Lending

Oct 22, 2025

7 min read

Artificial Intelligence (AI) has emerged as a transformative force across industries, reshaping how products are designed, services are delivered, and customer experiences are personalized. In financial ecosystems like payments, lending, and financial advice, AI has been pivotal in driving efficiency and innovation. Yet alongside this evolution comes the pressing need to implement and manage AI responsibly. Responsible AI is not merely a technical endeavor; it is a moral commitment to fairness, transparency, accountability, and customer trust.

In this post, we will explore what responsible AI looks like within the context of payments, lending, and financial advice, proposing key principles to guide ethical AI practices and ensure that technological progress benefits all stakeholders equitably.

The Role of AI in Financial Services

AI-enabled solutions are transforming several facets of the financial landscape:

Payments: AI is optimizing payment processing by detecting fraudulent transactions in real time, improving efficiency through automation, and enabling personalized customer experiences.

Lending: AI-driven models are democratizing access to credit by analyzing alternative data that traditional credit scoring systems might overlook, such as mobile phone usage patterns, utility payments, and social media activity.

Financial Advice: Robo-advisors are helping individuals plan their finances by leveraging AI to analyze spending habits, set financial goals, and recommend investment strategies.

While these capabilities are promising, they are not without challenges. Missteps in AI deployment can amplify biases, erode trust, or even compromise security.

Principles of Responsible AI in Financial Ecosystems

To ensure responsible deployment of AI in financial services, organizations should adhere to guiding principles that prioritize ethical considerations alongside technical sophistication:

1. Fairness and Equity

AI systems often learn from historical data, which can carry inherent biases. For example, in lending, a model trained on biased datasets might perpetuate discriminatory practices, denying credit to individuals from marginalized communities.

Responsible AI requires implementing methods to identify and mitigate algorithmic bias. This includes:

Conducting regular audits of training datasets to ensure inclusivity.

Applying fairness metrics during model evaluation to assess whether outcomes are equitable for diverse demographic groups.

Leveraging explainable AI tools to understand decision-making processes and identify areas of bias.

Financial institutions must endeavor to design models that extend opportunities to underserved and underrepresented populations while reducing the likelihood of discrimination.
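To make the idea of a fairness metric concrete, here is a minimal sketch that compares approval rates across two groups. The group labels, the toy decisions, and the 0.8 review threshold are all assumptions for illustration (the threshold echoes the commonly cited "four-fifths" rule of thumb); a real evaluation would use metrics and thresholds chosen with legal and compliance teams.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical toy data: 10 decisions per group.
decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

REVIEW_THRESHOLD = 0.8  # assumed rule-of-thumb cutoff, not a legal standard

rates = approval_rates(decisions)
gap = demographic_parity_difference(rates)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(f"approval rates: {rates}")
print(f"parity gap: {gap:.3f}, impact ratio: {ratio:.3f}, review: {needs_review}")
```

A gap or ratio like this only signals that outcomes differ between groups. It does not explain why, so it works best as a trigger for the dataset audits and explainability checks described above.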

2. Transparency and Explainability

Financial decisions can have life-altering consequences: a rejected loan application or an inaccurate financial prediction can deeply affect an individual's future. To maintain customer trust, AI systems must be transparent and explainable.

The use of explainable AI techniques allows institutions to:

Provide clear rationales for decisions, such as why a particular payment was flagged as fraudulent or why a loan application was denied.

Empower customers to understand how their data is used and its impact on financial outcomes.

Transparency fosters trust and ensures compliance with regulatory standards. Customers should feel confident not only that AI systems are working in their best interests but also that they can challenge a decision if necessary.
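One simple way to produce a "clear rationale" is to report which inputs pushed a score down the most. The sketch below does this for a hand-written linear (logistic) scoring model by comparing an applicant with a baseline profile. The feature names, weights, bias, and baseline values are hypothetical, and production systems typically rely on dedicated explainability tooling instead of this simplified approach.

```python
import math

# Hypothetical model parameters, chosen only for illustration.
WEIGHTS = {
    "on_time_payment_rate": 3.0,
    "debt_to_income": -4.0,
    "months_of_history": 0.02,
}
BIAS = -1.0

# Hypothetical reference applicant used as the comparison point.
BASELINE = {
    "on_time_payment_rate": 0.9,
    "debt_to_income": 0.3,
    "months_of_history": 48,
}


def score(applicant):
    """Approval probability from a logistic model over the weighted features."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def reason_codes(applicant, top_n=2):
    """Features that lowered the score most, relative to the baseline."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    # Keep only features that hurt the applicant, most harmful first.
    negative = sorted(
        (value, name) for name, value in contributions.items() if value < 0
    )
    return [name for _, name in negative[:top_n]]


applicant = {
    "on_time_payment_rate": 0.6,
    "debt_to_income": 0.55,
    "months_of_history": 12,
}

print(f"approval probability: {score(applicant):.2f}")
print(f"main factors: {reason_codes(applicant)}")
```

Because the model is linear, each contribution is exact and the ranking is easy to justify to a customer. For non-linear models, the contributions would have to be approximated, which is where specialized explainability methods come in.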

3. Accountability and Governance

AI systems are complex and often operate autonomously, which poses challenges when errors occur. Responsible AI emphasizes accountability through proper governance frameworks that assign clear ownership of decisions made by algorithms.

Organizations can implement accountability through:

Creating oversight committees to review AI deployment and operational decisions.

Offering recourse mechanisms, such as appeals or manual review processes, for customers negatively impacted by AI decisions.

Aligning AI usage with applicable laws and regulations, such as the General Data Protection Regulation (GDPR), to ensure compliance.

Governance ensures not only the operational integrity of AI systems but also ethical responsibility for their potential impacts.

4. Privacy and Security

Payments, lending, and financial advice fundamentally depend on access to sensitive consumer data. Protecting this data is paramount in AI applications. Responsible AI mandates robust privacy protocols, such as:

Encrypting data at rest and in transit to prevent unauthorized access.

Implementing differential privacy techniques, which add calibrated noise to aggregate statistics so that individual data points cannot be inferred from the results.

Informing customers about data usage practices in simple, accessible language.

Moreover, AI systems must be resilient against cyberattacks, ensuring that vulnerabilities are proactively identified and mitigated before they can be exploited.
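As an illustration of the differential privacy idea mentioned above, the following sketch applies the Laplace mechanism to a simple counting query. The transaction amounts, the threshold, and the epsilon value are assumptions for the example, and a production system would use a vetted privacy library and track its privacy budget across queries.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of the values matching the predicate.

    A counting query has sensitivity 1: adding or removing one person
    changes the true count by at most 1, so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical transaction amounts; the 1000 threshold is arbitrary.
amounts = [120, 4500, 80, 2300, 15, 980, 7600, 310]
rng = random.Random(42)  # seeded only to make the example repeatable

noisy = private_count(amounts, lambda a: a > 1000, epsilon=0.5, rng=rng)
print(f"noisy count of large transactions: {noisy:.2f}")
```

Smaller epsilon values add more noise and give stronger privacy, at the cost of less accurate statistics. Choosing that trade-off is a policy decision as much as a technical one.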

Real-world Examples of Responsible AI Initiatives

Several institutions are pioneering responsible AI practices in financial services:

AI in Payments: PayPal deploys advanced fraud detection models that not only identify abnormal payment patterns but also adapt to emerging threats in real time, balancing security with user convenience.

AI in Lending: Fintech firms are using alternative credit scoring models to offer loans to first-time borrowers or those with limited credit histories. These models are subjected to fairness checks to avoid perpetuating bias against traditionally underserved groups.

AI in Financial Advice: Robo-advisory platforms ensure that their recommendations align with individual risk profiles and financial goals, actively preventing overpromising or underestimating risks.

These examples illustrate how applying responsible AI principles benefits both customers and organizations, setting benchmarks for ethical practices within the industry.

Challenges in Implementing Responsible AI

Despite the potential benefits, implementing responsible AI isn’t without hurdles:

Data Quality: Ensuring datasets are unbiased and representative remains an ongoing challenge.

Operational Costs: Creating governance frameworks and explainable AI models requires significant investment in resources and expertise.

Regulatory Uncertainty: Evolving global regulations around AI and data usage necessitate constant monitoring and adaptation, increasing complexity for financial institutions.

Responsible AI thus involves a balance between technological innovation, ethical considerations, cost implications, and regulatory compliance.

Building Ethical Foundations for AI in Finance

As payments, lending, and financial advice continue to evolve under the aegis of AI, stakeholders must recognize their shared responsibility to uphold ethics, transparency, and accountability. Responsible AI is not simply about compliance; it is about creating systems that serve all individuals equitably while prioritizing fairness, security, and trust.

PayPal has an unprecedented opportunity to set industry standards by integrating responsible AI into its operations, from fraud detection algorithms to credit scoring systems. Together, the industry can redefine what it means to deliver value responsibly, ensuring financial inclusion and equitable access to opportunities across the globe.

Responsible AI is not a choice; it is the cornerstone of sustainable innovation for a better, fairer financial future.

Recommended

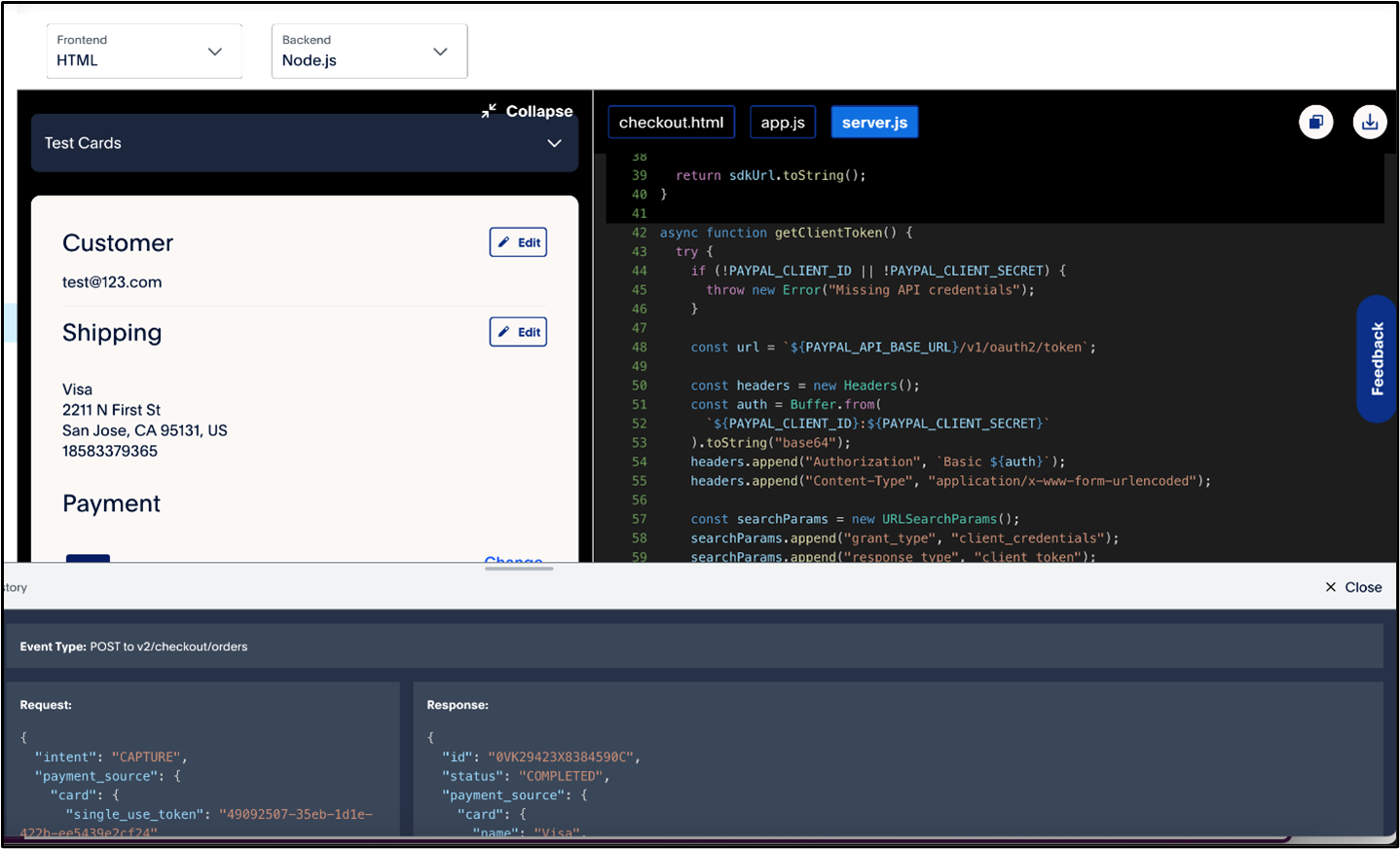

A Faster Guest Checkout: How to Integrate Fastlane by PayPal

8 min read

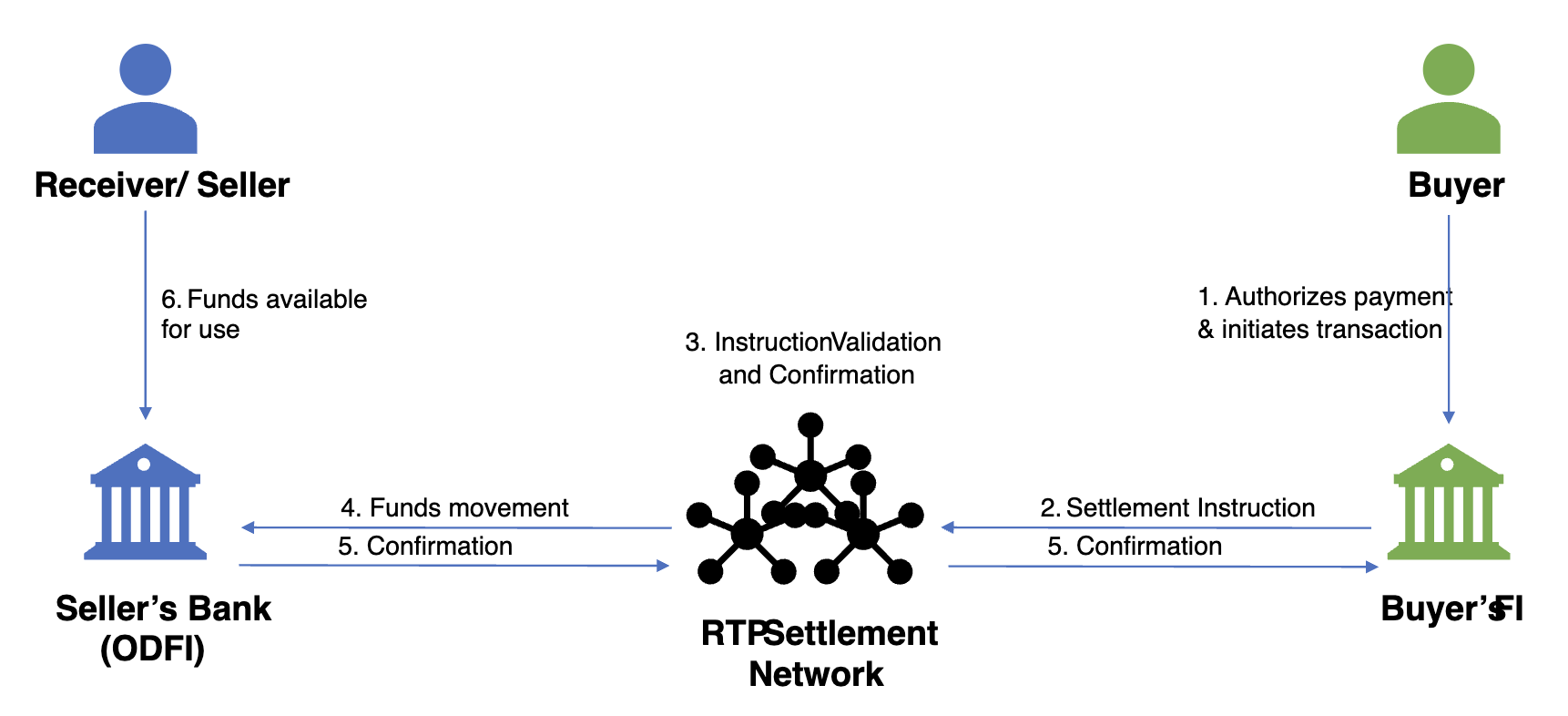

Exploring the Growth of Real Time Payment Systems

5 min read

Pay by Bank for E-Commerce | Using Bank Accounts to Make Purchases with SMBs [ACH]

5 min read