PayPal's LLM Integration Quickstart Guide

Aug 15, 2025

In the evolving AI landscape, Large Language Models (LLMs) aren’t just for generating text; they’re increasingly empowered to take real actions. PayPal’s Model Context Protocol (MCP) server enables LLM agents to understand and trigger payment flows using natural language. The latest quickstart guide shows how to connect LLMs from providers like Anthropic or OpenAI to the PayPal MCP server, making payments feel as easy as talking.

Before your LLM can act on PayPal’s behalf, it needs a secure way to authenticate. The guide walks you through obtaining an access token, either via a cURL request or programmatically through Python or TypeScript. The access token is generated using your PayPal client ID and secret. Once you have your access token, you're ready to integrate with the LLM of your choice.
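As a rough illustration of the token step, here is a minimal Python sketch that uses only the standard library. It assumes PayPal's standard OAuth 2.0 client-credentials flow against the sandbox token endpoint; the function names `build_token_request` and `fetch_access_token` are hypothetical helpers chosen for this example, and the quickstart guide's own snippet may look different.

```python
import base64
import json
import urllib.parse
import urllib.request

# Sandbox OAuth 2.0 token endpoint (assumption: you are testing in sandbox;
# live credentials would target https://api-m.paypal.com instead).
TOKEN_URL = "https://api-m.sandbox.paypal.com/v1/oauth2/token"


def build_token_request(
    client_id: str, client_secret: str, url: str = TOKEN_URL
) -> urllib.request.Request:
    """Build (but do not send) the client-credentials token request."""
    # HTTP Basic auth: base64("client_id:client_secret")
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    basic = base64.b64encode(credentials).decode("ascii")
    # Form-encoded body asking for a client-credentials grant
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def fetch_access_token(client_id: str, client_secret: str) -> str:
    """Send the request and return the access_token field of the JSON response."""
    request = build_token_request(client_id, client_secret)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["access_token"]
```

In practice you would call `fetch_access_token` with the client ID and secret read from your own configuration (environment variables are a common choice) rather than hard-coding them, and pass the returned token along when connecting your LLM to the MCP server.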

Developers can enable LLM agents to understand and execute PayPal operations using natural language, whether that means invoicing, processing payments, or managing other financial tasks. The MCP server supports LLMs from both Anthropic and OpenAI, offering teams the flexibility to choose the AI tool that fits their needs.

Get started today

With PayPal’s LLM integration quickstart guide, developers can shift from crafting transactional UIs to creating conversational commerce experiences. It’s about making payments feel as effortless as asking a question. PayPal’s MCP server paves the way by turning language into real financial actions, seamlessly embedded into intelligent AI workflows.

For more information, visit PayPal.ai.

Recommended

A Faster Guest Checkout: How to Integrate Fastlane by PayPal

8 min read

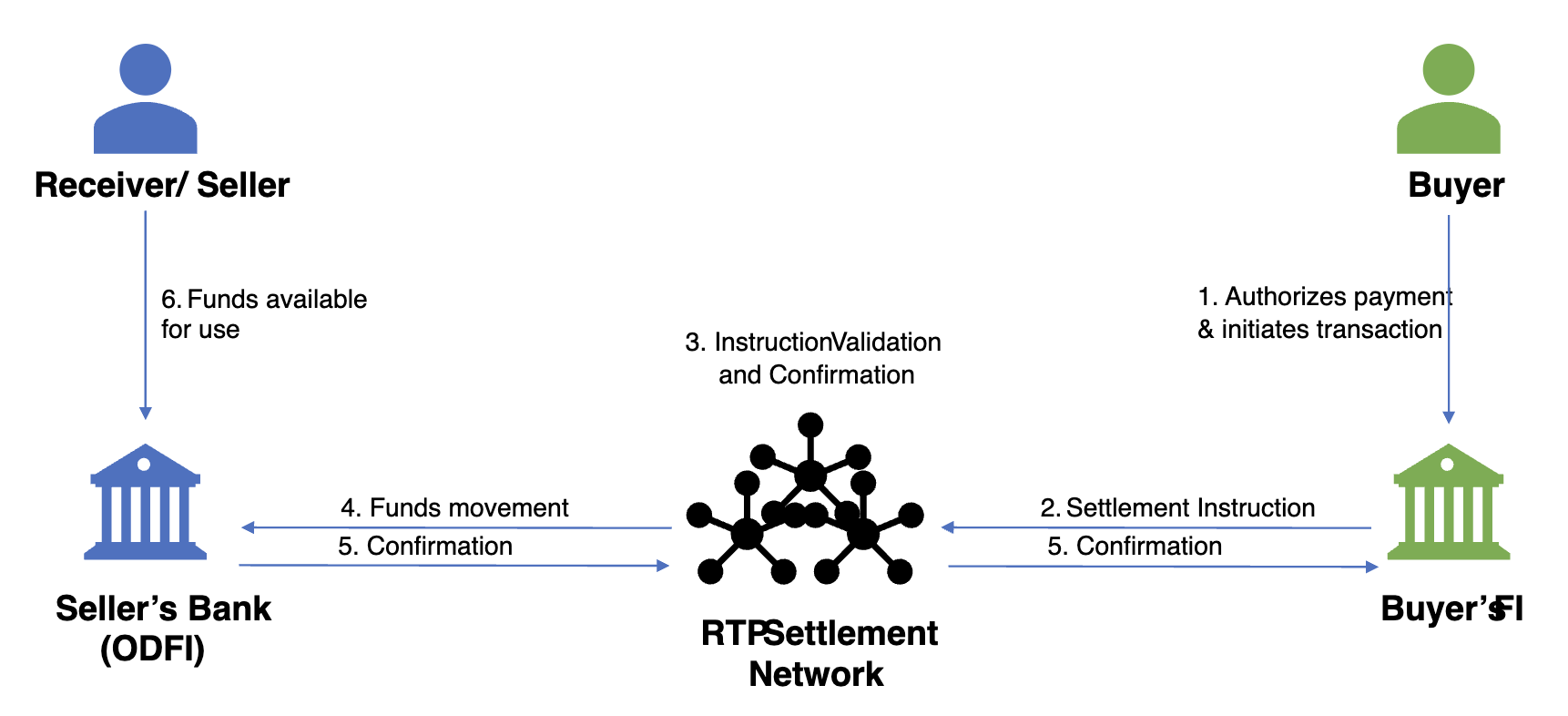

Exploring the Growth of Real Time Payment Systems

5 min read

Pay by Bank for E-Commerce | Using Bank Accounts to Make Purchases with SMBs [ACH]

5 min read